The Healthcare AI Trust Gap

Picture this: a patient walks into their doctor’s office and learns that an AI system will be involved in analyzing their symptoms, recommending a treatment plan, and monitoring their recovery. According to our survey, nearly four in ten patients in that position would walk away entirely.

That’s the paradox at the heart of healthcare AI adoption. While artificial intelligence now powers everything from electronic health records to diagnostic imaging and patient portals, a massive gap remains between what the technology can do and whether patients will actually use it.

The issue isn’t technical performance—it’s trust. Patients want AI that respects their autonomy, protects their data, and supports (not replaces!) their clinicians. Without those foundations, even the most advanced system risks rejection.

Our latest research, captured in the white paper Designing AI That Patients Trust, reveals what drives acceptance and what triggers resistance. The findings point to one conclusion: success with ethical AI in healthcare depends less on smarter algorithms than on building systems that patients feel confident choosing.

Why Nearly 40% of Patients Reject Healthcare AI

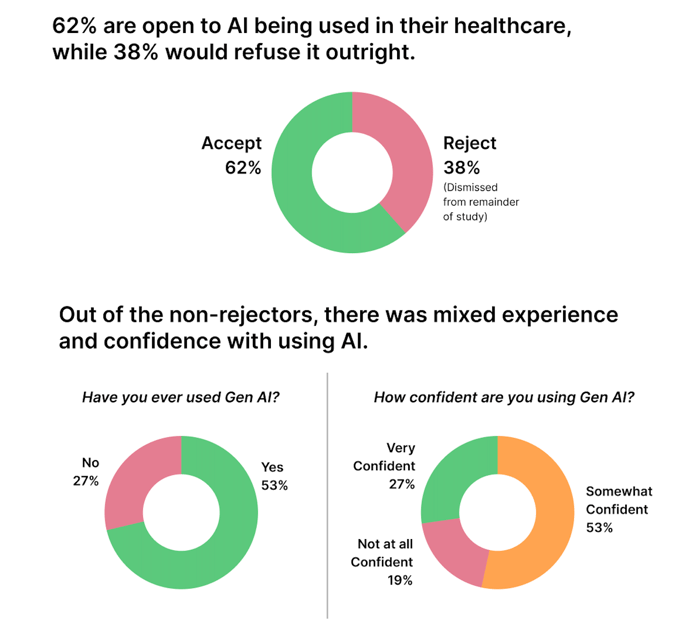

Our survey revealed a startling result: 38.5% of patients said they would refuse healthcare AI under any circumstances. This isn’t simply fear of innovation or general skepticism; it reflects deep concerns about data use, autonomy, and clinical oversight.

Even among patients open to AI, confidence is shaky. Only 27% describe themselves as “very confident” using AI-driven tools. Most fall into the hesitant majority: 61.5% who might adopt AI in healthcare, but only if their concerns are directly addressed.

👉 See the full findings — download the white paper.

Why Healthcare AI Adoption Barriers Matter

These trust gaps aren’t abstract; they carry real costs:

- Investment risk: Health systems can spend millions on AI-powered tools, only to see adoption stall if patients refuse to engage.

- Clinical outcomes at stake: The most accurate diagnostic AI means little if patients don’t follow its recommendations. Trust directly affects adherence, and poor adherence is already a $100B annual challenge.

- Competitive positioning: Organizations that solve the trust problem first will define ethical AI in healthcare and set the standards others must follow.

The takeaway is clear: Healthcare AI adoption depends less on technical capabilities than on patient confidence. Without trust, innovation stalls. With it, AI becomes a true competitive advantage.

Patient-Centered AI Design: What Builds Trust vs. What Destroys It

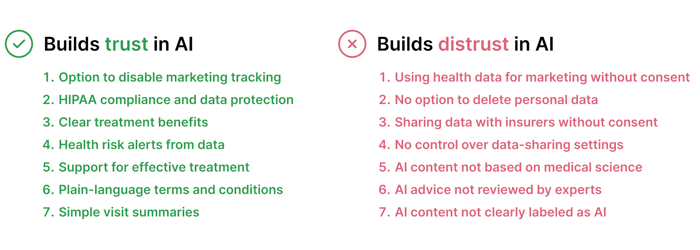

Patients aren’t asking healthcare AI to be perfect; they’re asking it to be honest. Our research shows that healthcare AI adoption often comes down to a handful of design choices that either build confidence or all but guarantee rejection.

The Design Imperative

Ethical AI in healthcare must be engineered for trust from the start—not added as an afterthought. Organizations that prioritize transparency, control, and clinical validation reduce patient anxiety, improve adherence, and create a durable competitive edge.

The choice is clear: design for trust early, or spend years trying to rebuild credibility after patients reject your system.

The Psychology Behind Patient Decisions: Moral Foundations of Healthcare AI Trust

Imagine this: a 45-year-old patient receives an AI-generated treatment recommendation that’s clinically accurate. Yet she walks away without following it. Why? Because she never felt like she had a choice.

Scenarios like this play out every day in healthcare, and they highlight a critical blind spot: we’ve been designing AI for accuracy when we should be designing for human psychology.

Beyond Accuracy: How Patients Judge Healthcare AI

Patients don’t evaluate AI the same way they evaluate other medical technologies. Instead, they judge it through deep-seated moral instincts, a pattern that maps closely onto social psychologist Jonathan Haidt’s Moral Foundations Theory:

- Autonomy (Liberty vs. Oppression): Do I have control? Can I opt out or adjust settings?

- Safety (Care vs. Harm): Will this protect me from harm and flag risks early?

- Fairness (Fairness vs. Cheating): Is this equitable, or biased toward certain groups?

- Medical authority (Authority vs. Subversion): Is a licensed clinician behind this advice?

- Loyalty (Loyalty vs. Betrayal): Does this strengthen my relationship with providers?

- Dignity (Sanctity vs. Degradation): Does this treat me like a human being, not just data?

Why This Matters for Healthcare AI Adoption

Patient resistance isn’t primarily about fear of technology or lack of digital literacy; it’s about values alignment. The same patient who embraces AI scheduling may reject AI-generated care plans if they feel excluded from the decision-making process.

The lesson for healthcare leaders is simple: adoption depends on moral alignment, not just technical superiority. Organizations that design AI systems to respect autonomy, fairness, and dignity will earn trust where others fail.

Actionable Framework: Building Trustworthy and Ethical Healthcare AI Systems

Too many healthcare organizations approach AI backwards: they perfect the algorithm, check regulatory boxes, and only then wonder why patients won’t use it. Our research points to a different path: start with trust, then build everything else around it.

The TRUST Strategy for Healthcare AI Adoption

We’ve distilled our findings into five principles healthcare leaders can apply immediately:

- Transparency First – Make AI involvement obvious. Patients prefer clear labeling and plain explanations. Honesty about limitations builds credibility.

- Respect Patient Control – Give patients real power over their data: easy deletion, meaningful opt-outs, and privacy settings that actually work.

- Uphold Medical Standards – Show visible clinical validation. Patients trust AI more when they see a doctor’s endorsement and supporting evidence.

- Start Small, Scale Smart – Earn trust in low-stakes applications like scheduling or visit summaries before moving into diagnoses and treatments.

- Test and Iterate – Measure patient trust, not just usage. Collect feedback, survey confidence levels, and refine based on real-world experience.

Why This Framework Matters

Trust isn’t a “nice-to-have.” It’s the foundation of ethical AI in healthcare. Organizations that follow these principles reduce patient anxiety, improve adherence, and create sustainable competitive advantage.

The bottom line: build for trust from day one, or spend years trying to recover after patients reject your system.

Key Takeaways for Healthcare AI Leaders

The future of healthcare AI adoption won’t be defined by better algorithms; it will be defined by patient trust. While 38.5% of patients reject AI entirely, most of the rest remain hesitant, waiting to see whether these systems respect their values. That’s not a technology gap. It’s a trust gap.

Market Leadership Through Trust

In healthcare AI, being first-to-market matters less than being first-to-trust. Early movers that embed transparency and patient control:

- Stay ahead of HIPAA, GDPR, and emerging regulations

- Attract top clinical talent who value ethical AI practices

- Win over investors looking for strategies that won’t stall at the adoption stage

A 90-Day Plan to Build Trust

Winning organizations aren’t necessarily the most advanced—they’re the ones that move fastest on fundamentals. Start with:

- Audit for trust gaps: Walk through your AI tools as a skeptical patient. Is AI involvement obvious? Are the recommendations clear? Can users opt out easily?

- Fix the obvious: Add visible AI labeling, update privacy settings for real control, and ensure every recommendation shows medical validation.

- Measure what matters: Track patient trust metrics (e.g., confidence levels, voluntary adoption, and feedback), not just usage rates.

- Scale smart: Use trust signals to decide when to expand AI into higher-stakes areas. Pause or redesign features that trigger hesitation.

The Strategic Imperative

Trust isn’t a constraint on innovation—it’s the unlock for sustainable growth. The organizations that dominate ethical AI in healthcare won’t just build smarter algorithms. They’ll build patient-centered systems that people actively want to use.

Bottom line: Trust isn’t a side project. It’s the foundation of healthcare AI adoption—and the competitive advantage for leaders who get it right.

👉 See the full recommendations — download the white paper.

The Future Belongs to Trustworthy Healthcare AI

The future of AI in healthcare won’t be shaped by the smartest algorithms. It will be defined by whether patients trust these systems enough to use them.

Our research shows the stakes clearly: nearly 40% of patients reject healthcare AI entirely, and most others remain cautious. That makes ethical AI in healthcare the true competitive advantage. Organizations that lead with transparency, patient control, and visible medical oversight won’t just boost adoption—they’ll set the standards everyone else must follow.

But the window is closing fast. Early patient experiences are forming now, and once trust is lost, it’s difficult to recover.

The choice is clear: build for trust today, or spend years catching up.